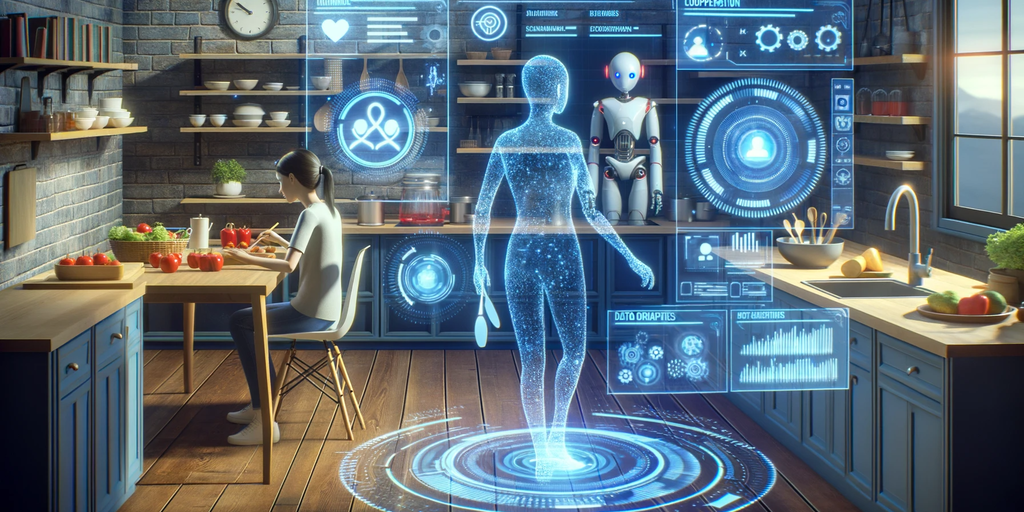

Meta AI has unveiled Habitat 3.0, a new simulator that advances research into AI agents that can collaborate with and assist humans in virtual 3D environments. The platform, presented by the team at Facebook AI Research (FAIR), allows high-fidelity simulation of human avatars and enables real-time interaction between humans and AI through a virtual interface.

According to the researchers, Habitat 3 stands out for its ability to generate diverse and realistic virtual humans that can navigate indoors and manipulate objects nearly as fast as non-human agents.

Calling it “a simulation platform for studying collaborative human-robot tasks in home environments,” FAIR wrote in an accompanying research paper that “Habitat 3.0 offers contributions across three dimensions.”

The ultimate goal is what Meta calls “embodied AI,” where technology takes an active role in the physical world through wearables and robots.

With Habitat 3.0, Meta has built a platform for large-scale training of human-robot interaction in everyday tasks, from tidying up a living room to preparing a meal in the kitchen.

To benchmark these collaborative AI capabilities, the team designed two types of interactive tasks: social navigation, where a robot must locate and follow an avatar, and social rearrangement, which requires a robot and avatar to clean up a room together. In their experiments, the researchers found that the virtual robots learned policies for collaborating efficiently with avatars, such as giving them space when navigating together. The social rearrangement experiments also showed that learned policies could improve work efficiency when paired with unseen avatar partners.

“We’ve made considerable progress toward our vision of socially intelligent robots since open sourcing Habitat 1.0 in 2019,” Meta remarked in its announcement. However, there is still work to do, and tools like Habitat 3 can encourage greater engagement in this important research direction.

In the future, Meta says it expects to “focus on deploying the models learned in simulation into the physical world so we can better gauge their performance.”

Complementing this simulator is the Habitat Synthetic Scenes Dataset (HSSD-200). This artist-authored 3D dataset boasts over 18,000 objects across 466 semantic categories in 211 scenes. It’s a tool that could be used to train navigation agents with unparalleled precision, offering a more realistic training environment for robots.

Meta also introduced HomeRobot, another platform designed to bridge the gap between simulation and the real world. HomeRobot allows robots to perform tasks in both simulated and physical-world environments.

These advancements have broader implications, especially when viewed through the lens of Meta’s long-term goals. The Metaverse, a collective virtual shared space, is imagined as a convergence of virtually enhanced physical reality and interactive digital spaces. With tools like Habitat 3.0, the line between the digital and physical worlds blurs, allowing for more immersive and interactive experiences.

Furthermore, Meta’s dedication to AI is evident in its significant investments in the field. The tech giant is a leading contributor to platforms dedicated to natural language processing, showcasing its commitment to advancing the domain. Its LLaMA LLM powers many of the open-source custom LLMs currently available, and it is the corporation with the most commits across open-source AI projects on Hugging Face, followed by Microsoft.

Habitat 3 promises to unlock new research into assistive AI agents that can cooperate with people in virtual worlds, aligned with Meta’s vision for collaborative AI in augmented and virtual reality. The ability to rapidly iterate and train policies in high-fidelity simulation before deployment to the real world could accelerate the development of even more useful AI capabilities.